EE445M RTOS

Taken at the University of Texas Spring 2015


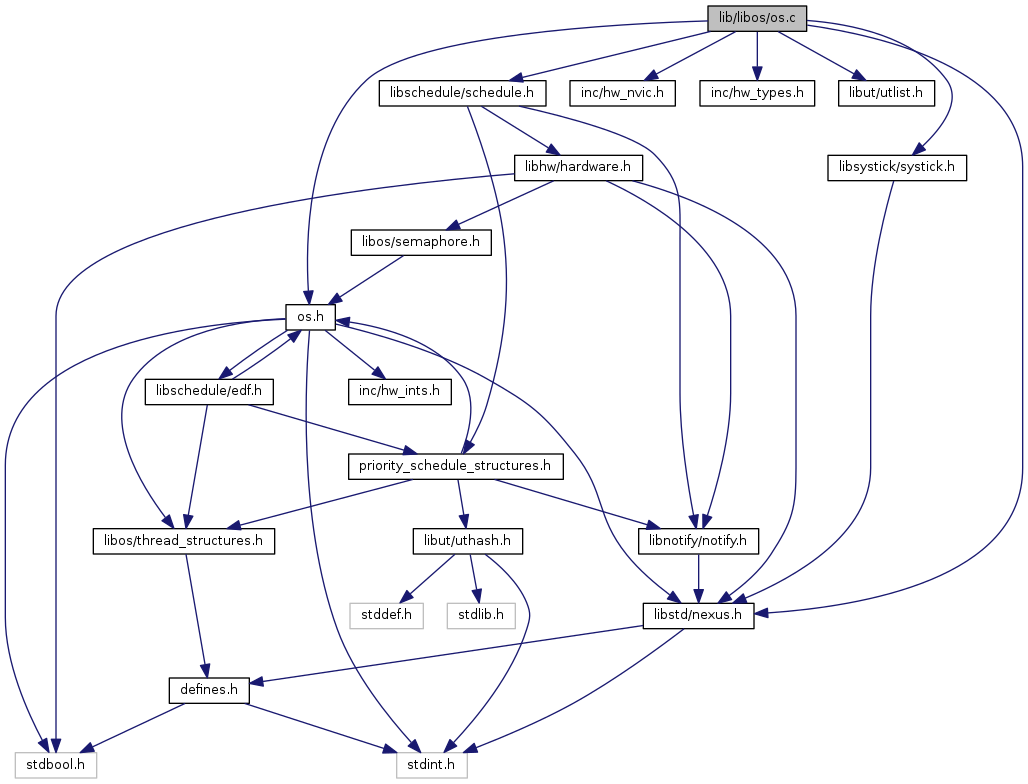

#include "os.h"
#include "inc/hw_nvic.h"
#include "inc/hw_types.h"
#include "libstd/nexus.h"
#include "libut/utlist.h"
#include "libsystick/systick.h"
#include "libschedule/schedule.h"


Functions | |

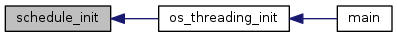

| void | os_threading_init () |

| int8_t | get_os_num_threads () |

| tcb_t * | os_add_thread (task_t task) |

| tcb_t * | os_remove_thread (task_t task) |

| int32_t | os_running_thread_id () |

| tcb_t * | os_tcb_of (const task_t task) |

| void | os_launch () |

| always void | _os_reset_thread_stack (tcb_t *tcb, task_t task) |

| always void | scheduler_reschedule (void) |

| void | SysTick_Handler () |

| void | PendSV_Handler () |

| void | os_suspend () |

| void | schedule (task_t task, frequency_t frequency, DEADLINE_TYPE seriousness) |

| void | schedule_aperiodic (pisr_t pisr, HW_TYPE hw_type, hw_metadata metadata, microseconds_t allowed_run_time, DEADLINE_TYPE seriousness) |

| void | schedule_init () |

| sched_task_pool * | schedule_hash_find_int (sched_task_pool *queues, frequency_t target_frequency) |

| void | edf_init () |

| void | edf_insert (sched_task *task) |

| tcb_t * | edf_pop () |

| sched_task * | edf_get_edf_queue () |

| void | _os_choose_next_thread () |

Variables

| Type | Variable |
| --- | --- |
| static int32_t | OS_PROGRAM_STACKS [SCHEDULER_MAX_THREADS][100] |
| volatile sched_task * | executing |
| volatile sched_task_pool * | pool |
| volatile uint32_t | clock = 0 |
| volatile sched_task | EDF [SCHEDULER_MAX_THREADS] |
| volatile sched_task * | EDF_QUEUE = NULL |
| bool | OS_FIRST_RUN = true |
| bool | OS_THREADING_INITIALIZED |
| uint8_t | OS_NUM_THREADS |

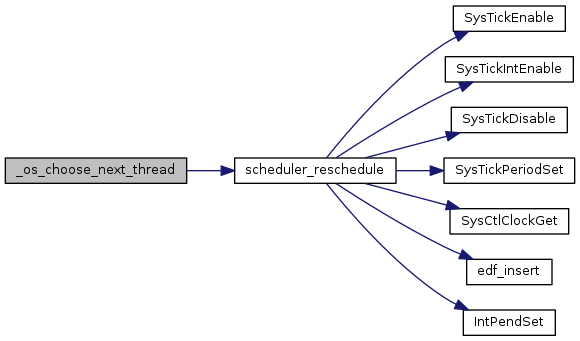

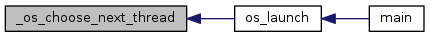

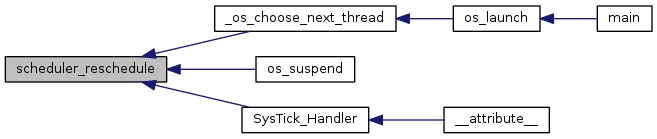

| void _os_choose_next_thread | ( | ) |

Definition at line 544 of file os.c.

References scheduler_reschedule().

Referenced by os_launch().

| sched_task* edf_get_edf_queue | ( | ) |

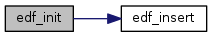

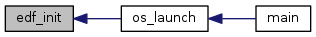

| void edf_init | ( | ) |

Definition at line 475 of file os.c.

References DL_EDF_INSERT, edf_insert(), sched_task_pool::next, sched_task_pool::queue, and SCHEDULER_QUEUES.

Referenced by os_launch().

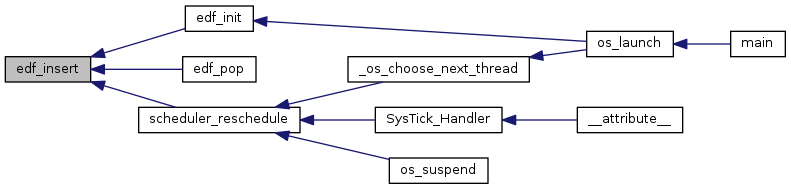

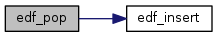

| void edf_insert | ( | sched_task * | ) |

Inserts the specified task into the EDF queue using insertion sort, keeping the queue ordered by absolute deadline.

Definition at line 491 of file os.c.

References sched_task::absolute_deadline, DL_EDF_INSERT, DL_EDF_PREPEND, EDF_QUEUE, and sched_task::pri_next.

Referenced by edf_init(), edf_pop(), and scheduler_reschedule().
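The insertion-sort step described for edf_insert can be sketched as follows. This is a minimal, hypothetical illustration, not the code in os.c: it uses a trimmed-down sched_task holding only absolute_deadline and pri_next (the two task fields the References list names), a plain singly linked list in place of the DL_EDF_INSERT/DL_EDF_PREPEND macros, and a made-up function name, edf_insert_sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, trimmed-down stand-in for libschedule's sched_task:
 * only the fields that the EDF ordering needs. */
typedef struct sched_task {
    uint32_t absolute_deadline;   /* systick count at which the task is due */
    struct sched_task *pri_next;  /* next task in the EDF queue */
} sched_task;

/* Insert `task` into the list headed by *queue, keeping the list sorted by
 * ascending absolute_deadline (earliest deadline at the head). A task whose
 * deadline ties an existing one goes after it, so ties stay FIFO.
 * Note: plain `<=` does not account for uint32_t clock wraparound. */
static void edf_insert_sketch(sched_task **queue, sched_task *task)
{
    assert(queue != NULL && task != NULL);

    sched_task **link = queue;
    /* Skip every task that is due no later than the new one. */
    while (*link != NULL && (*link)->absolute_deadline <= task->absolute_deadline) {
        link = &(*link)->pri_next;
    }
    /* Splice the new task in front of the first later-deadline task. */
    task->pri_next = *link;
    *link = task;
}
```

Walking a pointer to the link (`sched_task **`) rather than to the node avoids special-casing insertion at the head of the queue.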

| tcb_t* edf_pop | ( | ) |

Definition at line 512 of file os.c.

References sched_task::absolute_deadline, sched_task_pool::deadline, edf_insert(), EDF_QUEUE, sched_task::next, sched_task_pool::next, sched_task::pri_next, sched_task_pool::queue, SCHEDULER_QUEUES, SYSTICKS_PER_HZ, and sched_task::tcb.
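The References list for edf_pop (absolute_deadline, the pool's deadline, SYSTICKS_PER_HZ, and edf_insert()) is consistent with a common EDF step for periodic tasks: take the earliest-deadline task, advance its deadline by one period, and put it back in order. The sketch below illustrates that idea only; it is an assumption that edf_pop works this way, and the per-task `period` field, the tcb_t stand-in, and the names insert_sorted and edf_pop_sketch are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct tcb { int id; } tcb_t;   /* hypothetical stand-in for the OS TCB */

/* Hypothetical stand-in for sched_task. `period` replaces the real code's
 * lookup of the pool deadline and SYSTICKS_PER_HZ conversion. */
typedef struct sched_task {
    uint32_t absolute_deadline;   /* systick at which the task is next due */
    uint32_t period;              /* systicks between releases (assumed field) */
    tcb_t *tcb;                   /* thread that runs this task */
    struct sched_task *pri_next;  /* next task in the EDF queue */
} sched_task;

/* Sorted insert by ascending absolute_deadline (ties stay FIFO). */
static void insert_sorted(sched_task **queue, sched_task *task)
{
    sched_task **link = queue;
    while (*link != NULL && (*link)->absolute_deadline <= task->absolute_deadline) {
        link = &(*link)->pri_next;
    }
    task->pri_next = *link;
    *link = task;
}

/* Pop the earliest-deadline task, push its deadline one period forward,
 * re-insert it in deadline order, and return the thread to run now. */
static tcb_t *edf_pop_sketch(sched_task **queue)
{
    assert(queue != NULL);
    sched_task *head = *queue;
    if (head == NULL) {
        return NULL;                     /* nothing is ready */
    }
    *queue = head->pri_next;             /* unlink the head */
    head->absolute_deadline += head->period;
    insert_sorted(queue, head);          /* requeue for its next release */
    return head->tcb;
}
```

With a 10-tick task and a 25-tick task, the faster task is popped twice (deadlines 10 and 20) before the slower one (deadline 25), which is the EDF ordering.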

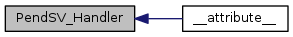

| void PendSV_Handler | ( | void | ) |

Definition at line 291 of file os.c.

References HWREG, NVIC_ST_CURRENT, and os_running_threads.

Referenced by __attribute__().

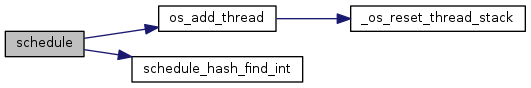

| void schedule | ( | task_t task, frequency_t frequency, DEADLINE_TYPE seriousness | ) |

Definition at line 386 of file os.c.

References sched_task::absolute_deadline, CDL_APPEND, CDL_DELETE, CDL_PREPEND, clock, sched_task_pool::deadline, MAX_SYSTICKS_PER_HZ, sched_task_pool::next, NULL, os_add_thread(), postpone_death, sched_task_pool::prev, sched_task_pool::queue, schedule_hash_find_int(), SCHEDULER_QUEUES, SCHEDULER_UNUSED_QUEUES, SCHEDULER_UNUSED_TASKS, sched_task::seriousness, sched_task::task, and sched_task::tcb.

Referenced by main().

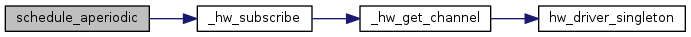

| void schedule_aperiodic | ( | pisr_t pisr, HW_TYPE hw_type, hw_metadata metadata, microseconds_t allowed_run_time, DEADLINE_TYPE seriousness | ) |

Schedules a pseudo-ISR to run when the hardware event described by HW_TYPE and hw_metadata occurs.

Definition at line 435 of file os.c.

References _hw_subscribe().

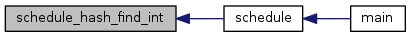

| sched_task_pool* schedule_hash_find_int | ( | sched_task_pool * queues, frequency_t target_frequency | ) |

Definition at line 456 of file os.c.

References sched_task_pool::deadline, sched_task_pool::next, and NULL.

Referenced by schedule().
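Despite the "hash" in its name, schedule_hash_find_int references only sched_task_pool::deadline, sched_task_pool::next, and NULL, which fits a linear scan over the linked list of pools (schedule() maintains that list with CDL_* macros, i.e. a circular list). The sketch below shows such a scan under those assumptions. The frequency_t typedef, the trimmed pool struct, the name find_pool, and the direct comparison of the pool key with the target are hypothetical; the real function may convert the frequency before comparing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t frequency_t;   /* hypothetical: real type lives in libschedule */

/* Hypothetical stand-in for sched_task_pool: one pool per distinct period,
 * linked into a circular list through `next`. */
typedef struct sched_task_pool {
    frequency_t deadline;              /* key identifying this pool (assumed) */
    struct sched_task_pool *next;      /* circular: last->next == first */
} sched_task_pool;

/* Linear scan of the circular pool list; returns the pool whose key equals
 * target, or NULL if there is none (or the list is empty). */
static sched_task_pool *find_pool(sched_task_pool *queues, frequency_t target)
{
    if (queues == NULL) {
        return NULL;
    }
    sched_task_pool *p = queues;
    do {
        if (p->deadline == target) {
            return p;
        }
        p = p->next;
    } while (p != queues);              /* stop after one full lap */
    return NULL;
}
```

The do/while form visits the starting pool exactly once, which is what a circular list needs; a `while (p != NULL)` loop would never terminate on a miss.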

| void schedule_init | ( | ) |

Initializes the internal data structures used by libschedule.

Definition at line 445 of file os.c.

References DL_PREPEND, SCHEDULER_MAX_THREADS, SCHEDULER_TASK_QUEUES, SCHEDULER_TASKS, SCHEDULER_UNUSED_QUEUES, and SCHEDULER_UNUSED_TASKS.

Referenced by os_threading_init().
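schedule_init references static arrays (SCHEDULER_TASKS, SCHEDULER_TASK_QUEUES) together with DL_PREPEND and the SCHEDULER_UNUSED_* lists, which suggests a static free-list allocator: every preallocated node starts on an "unused" list and is taken from it when a task is scheduled. A minimal sketch of that pattern follows. The array size, node type, and the names free_list_init, free_list_take, and dl_prepend are hypothetical; dl_prepend mirrors utlist's DL_PREPEND convention, in which the head's prev pointer refers to the tail.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_THREADS 4   /* hypothetical stand-in for SCHEDULER_MAX_THREADS */

typedef struct task_node {
    struct task_node *prev, *next;
} task_node;

static task_node free_tasks[MAX_THREADS];  /* static backing storage, no malloc */
static task_node *unused_head = NULL;      /* head of the free list */

/* Prepend in utlist DL style: head->prev is the tail, tail->next is NULL. */
static void dl_prepend(task_node **head, task_node *add)
{
    add->next = *head;
    if (*head != NULL) {
        add->prev = (*head)->prev;   /* new head inherits the tail pointer */
        (*head)->prev = add;
    } else {
        add->prev = add;             /* single element: prev points to itself */
    }
    *head = add;
}

/* Put every preallocated node on the free list. */
static void free_list_init(void)
{
    unused_head = NULL;
    for (int i = 0; i < MAX_THREADS; ++i) {
        dl_prepend(&unused_head, &free_tasks[i]);
    }
}

/* Take a node off the front of the free list in O(1); NULL when exhausted. */
static task_node *free_list_take(void)
{
    task_node *n = unused_head;
    if (n == NULL) {
        return NULL;
    }
    unused_head = n->next;
    if (unused_head != NULL) {
        unused_head->prev = n->prev;  /* keep head->prev == tail */
    }
    n->prev = n->next = NULL;
    assert(n >= free_tasks && n < free_tasks + MAX_THREADS);
    return n;
}
```

Because all storage is a fixed array, scheduling more than MAX_THREADS tasks fails cleanly (NULL) instead of allocating at runtime.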

|

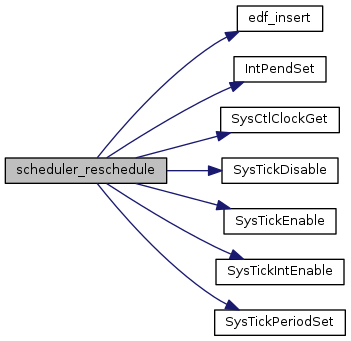

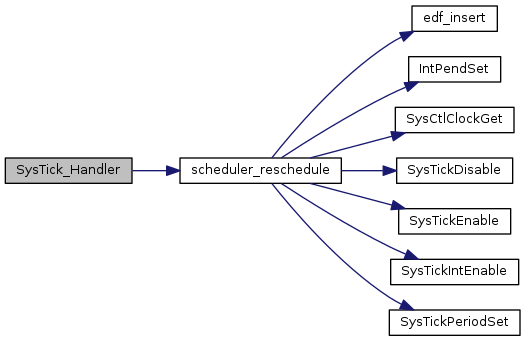

inline |

Definition at line 237 of file os.c.

References sched_task::absolute_deadline, clock, sched_task_pool::deadline, edf_insert(), EDF_QUEUE, FAULT_PENDSV, HWREG, IntPendSet(), sched_task::next, sched_task_pool::next, NVIC_ST_CURRENT, OS_FIRST_RUN, OS_NEXT_THREAD, sched_task::pri_next, sched_task_pool::queue, SCHEDULER_QUEUES, SysCtlClockGet(), SysTickDisable(), SysTickEnable(), SysTickIntEnable(), SysTickPeriodSet(), and sched_task::tcb.

Referenced by _os_choose_next_thread(), os_suspend(), and SysTick_Handler().

| void SysTick_Handler | ( | void | ) |

Definition at line 282 of file os.c.

References scheduler_reschedule().

Referenced by __attribute__().

| volatile uint32_t clock = 0 |

Definition at line 20 of file os.c.

Referenced by schedule(), and scheduler_reschedule().

| volatile sched_task EDF[SCHEDULER_MAX_THREADS] |

| volatile sched_task* EDF_QUEUE = NULL |

Linked list of tasks (with different periods) ready to be run.

Definition at line 27 of file os.c.

Referenced by edf_get_edf_queue(), edf_insert(), edf_pop(), and scheduler_reschedule().

| volatile sched_task* executing |

| bool OS_FIRST_RUN = true |

Definition at line 29 of file os.c.

Referenced by scheduler_reschedule().

| uint8_t OS_NUM_THREADS |

Definition at line 32 of file os.c.

Referenced by get_os_num_threads(), and os_remove_thread().

| int32_t OS_PROGRAM_STACKS[SCHEDULER_MAX_THREADS][100] | static |

A block of memory for each thread's local stack.

Definition at line 16 of file os.c.

Referenced by _os_reset_thread_stack(), and os_threading_init().
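On Cortex-M, a per-thread stack such as one row of OS_PROGRAM_STACKS is commonly prepared by writing a fake exception frame at its top, so that the first context switch "returns" into the task. The sketch below shows that general technique; it is an assumption that _os_reset_thread_stack does this, and the sketch further assumes the PendSV context switch saves R4-R11 in software. The function name init_thread_stack and its parameters are hypothetical, and addresses are passed as plain uint32_t values so the code also runs on a host.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_WORDS 100u          /* matches the [100] in OS_PROGRAM_STACKS */
#define XPSR_THUMB  0x01000000u   /* T bit: Cortex-M only executes Thumb code */

/* Build an initial frame at the top of `stack` (full-descending) and return
 * the saved stack pointer the scheduler would store for this thread.
 * Layout, low to high address: R4-R11 (software-saved, assumed),
 * then the hardware frame R0, R1, R2, R3, R12, LR, PC, xPSR. */
static uint32_t *init_thread_stack(uint32_t *stack, size_t words,
                                   uint32_t entry, uint32_t exit_addr)
{
    assert(stack != NULL && words >= 16);

    uint32_t *sp = stack + words;  /* one past the last word */
    *--sp = XPSR_THUMB;            /* xPSR */
    *--sp = entry & ~1u;           /* PC: Thumb bit cleared in the stacked PC */
    *--sp = exit_addr;             /* LR: where the task goes if it returns */
    for (int i = 0; i < 5; ++i) {
        *--sp = 0;                 /* R12, R3, R2, R1, R0 */
    }
    for (int i = 0; i < 8; ++i) {
        *--sp = 0;                 /* R11 down to R4, restored by the switch */
    }
    return sp;
}
```

On target, `entry` would be the task function's address, for example `(uint32_t)task`. Function pointers carry bit 0 set to mark Thumb code; the stacked PC clears it because the T bit in xPSR already selects Thumb state.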

| bool OS_THREADING_INITIALIZED |

Definition at line 31 of file os.c.

Referenced by os_threading_init().

| volatile sched_task_pool* pool |